OutSystems + GenAI: Our journey to community translation

Sandra Fernandes December 19, 2023 • 7 min read

Note: This blog was a joint effort with Ricardo Sequeira, OutSystems Digital Team Lead.

The OutSystems Developer Relations team just finished a massive transformation of the user experience for our Japanese community members (our second-largest developer population in the world) using generative AI. The story is one of trial and error that ranges from exploring the “art of the possible” to mapping and implementing the technology needed to make it a reality.

In this blog article, we share why we embarked on this project and tell the story of the journey that took us into the world of generative AI.

Giving up is not an option: The why

We want the members of the OutSystems Community all around the world to have the same opportunity to share their needs, questions, and comments and get support. Unfortunately, this has not been the case for the Japanese developers. Posts like this were frequent, with a similar answer, until people gave up.

We decided that "giving up" should never be an option. It was time to enable Japanese-speaking developers to be part of Community conversations.

Bringing Japanese-speaking developers into the conversation: The how

OutSystems has a long history of using automation to improve developer productivity, and we have been integrating AI into the OutSystems platform for years. So, we knew we had the AI experience and tools to offer our Japanese customers a better experience.

Selecting the model and prompt engineering

In a sea of different large language models (LLMs), how do you choose the one that’s best for your purpose? Our selection process involved evaluating four LLMs against Google Translate, as well as prompt engineering.

Our LLM evaluation was based on three parameters:

- Quality of translation: Native Japanese speakers rated the translations.

- Performance: Using translation samples, we recorded the average and maximum response time of each LLM.

- Costs: Using a sample, we recorded the total tokens (completion + prompt) and derived the average cost from each model’s pricing.
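As a rough illustration of the performance and cost parameters, the sketch below summarizes a set of recorded samples. The `Sample` fields, the `summarize` helper, and the per-1,000-token prices are hypothetical placeholders, not the values or tooling we used.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    latency_s: float         # seconds the model took to respond
    prompt_tokens: int       # tokens sent to the model
    completion_tokens: int   # tokens returned by the model


def summarize(samples, price_per_1k_prompt, price_per_1k_completion):
    """Return average/maximum latency and average cost for one model."""
    latencies = [s.latency_s for s in samples]
    costs = [
        s.prompt_tokens / 1000 * price_per_1k_prompt
        + s.completion_tokens / 1000 * price_per_1k_completion
        for s in samples
    ]
    return {
        "avg_latency_s": mean(latencies),
        "max_latency_s": max(latencies),
        "avg_cost": mean(costs),
    }


# Placeholder numbers for illustration only.
samples = [Sample(1.0, 1000, 500), Sample(3.0, 2000, 1000)]
report = summarize(samples, price_per_1k_prompt=0.01, price_per_1k_completion=0.02)
```

Running the same summary for each candidate model gives directly comparable latency and cost figures to set beside the human translation ratings.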

In the first evaluation iteration, we excluded two LLMs, Claude 2 and Amazon Titan, because of their language support and the translation rating threshold. (We want to unlock the AI translation for more languages in the future.) In addition, Amazon Titan did not meet our minimum performance criteria for real-time translation.

For prompt engineering, we used a playground that allowed us to vary the language, text, and LLM. Playgrounds enable organizations to explore and learn about generative AI without coding; companies as well-known as Walmart and CBRE are using them internally for specific use cases. For us, the playground made testing and iterating possible with no need for extra development. The focus was to improve the quality of translation and to prevent LLMs from adding extra information (for this, the temperature was also set to 0). Here is an example of a prompt we used:

You will now translate this text written in a forum post by the Outsystems community to {LANGUAGE} without including any additional explanations or instructions. Only reply to the translation, keeping the same meaning, tone, formatting, and taking into consideration the following forum post context: {CONTEXT}. If a term or expression can be interpreted in an IT and non-IT context, use the IT context. Do not translate OutSystems, generic software development technical references, or URL links. Keep all the HTML code as is.
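To make the placeholders concrete, here is a minimal sketch of how such a prompt could be filled in and paired with a temperature of 0. The `build_request` helper, the chat-style message layout, and the `example-model` name are assumptions for illustration; they do not describe the actual ODC API.

```python
PROMPT_TEMPLATE = (
    "You will now translate this text written in a forum post by the "
    "Outsystems community to {LANGUAGE} without including any additional "
    "explanations or instructions. Only reply to the translation, keeping "
    "the same meaning, tone, formatting, and taking into consideration the "
    "following forum post context: {CONTEXT}. If a term or expression can "
    "be interpreted in an IT and non-IT context, use the IT context. Do not "
    "translate OutSystems, generic software development technical "
    "references, or URL links. Keep all the HTML code as is."
)


def build_request(text, language, context, model="example-model"):
    """Assemble a hypothetical chat-style request payload."""
    instructions = PROMPT_TEMPLATE.format(LANGUAGE=language, CONTEXT=context)
    return {
        "model": model,      # placeholder model name
        "temperature": 0,    # discourage the model from adding extra text
        "messages": [
            {"role": "system", "content": instructions},
            {"role": "user", "content": text},
        ],
    }


request = build_request(
    text="<p>How do I filter an aggregate?</p>",
    language="Japanese",
    context="Question about aggregates",
)
```

Keeping the forum post in its own message, separate from the instructions, is one way to reduce the chance that text inside the post is read as an instruction.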

A new sample that better mimicked the complexity and HTML properties of actual forum posts was used for the final iteration. The translation quality was evaluated against Google Translate, and the cost of the models was also considered. Still, syntax and semantic errors in the English source text propagated to the Japanese translation. To avoid misunderstandings, the translation was divided into two steps: first correct the English text, then translate it to Japanese.
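The two-step approach can be sketched as a small pipeline. The `complete` callable stands in for whatever function sends an instruction and a text to an LLM, and the instruction wording here is illustrative rather than the prompts we used.

```python
from typing import Callable

# Hypothetical signature: (instruction, text) -> model output
Complete = Callable[[str, str], str]


def correct_then_translate(text: str, language: str, complete: Complete) -> str:
    """Step 1: fix the English source. Step 2: translate the corrected text."""
    corrected = complete(
        "Correct the grammar and spelling of this English text. "
        "Keep all HTML as is and reply only with the corrected text.",
        text,
    )
    return complete(
        f"Translate this text to {language}. "
        "Keep all HTML as is and reply only with the translation.",
        corrected,
    )
```

Because the second call only ever sees the corrected text, errors in the original post are less likely to carry over into the translation, at the cost of one extra model call per post.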

For now, despite its cost, ChatGPT 4 is the most suitable LLM for translating developer content. It was interesting to see how more technical text with HTML included changed some of the previous results.

Diving deep into integration: AI and ODC

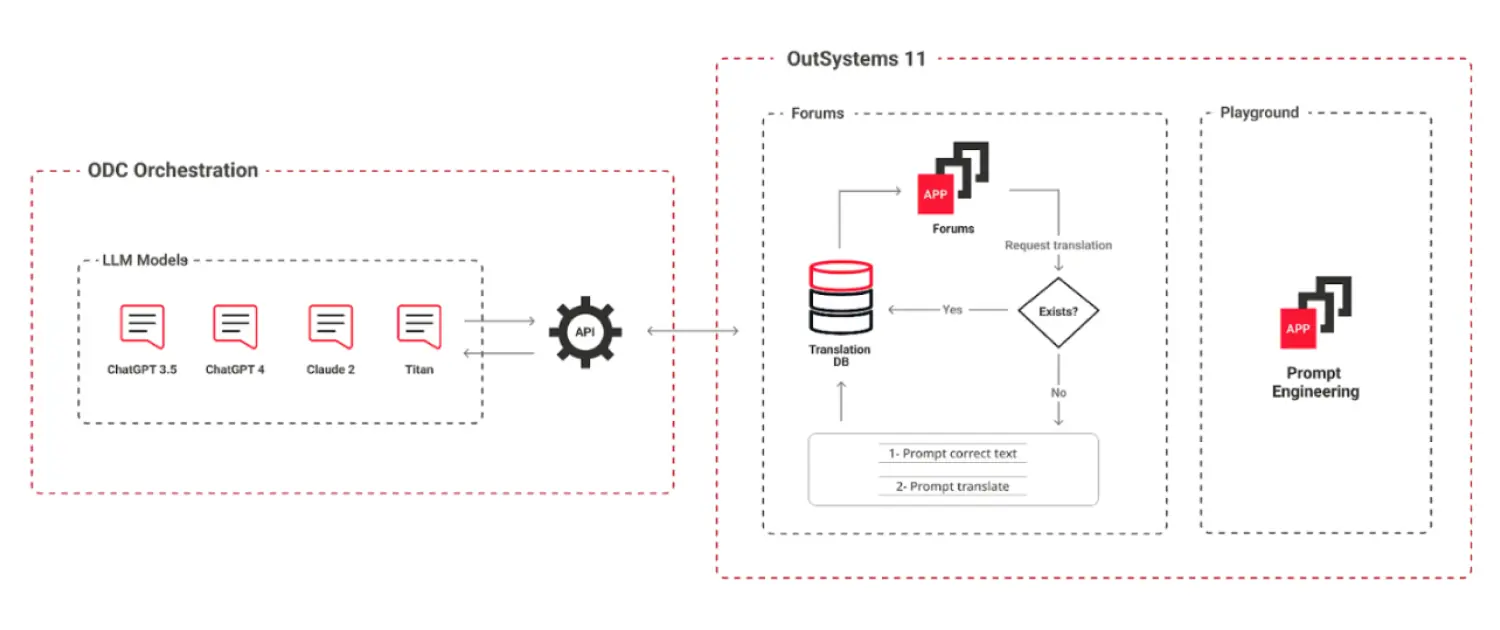

The forum translation is a use case that combines generative AI, OutSystems Developer Cloud (ODC), and OutSystems 11 (O11). At its foundation is an orchestrator built on ODC. The orchestrator acts as a composable piece that allows any OutSystems team to build their AI use case. The translation use case relies on API integration with AI models and a token count to estimate costs.

We connected our Community Forums (O11) to an API built on ODC that connects to different LLMs, returning the desired translation in real time. The translation use case only takes advantage of the integration with AI and the token count, but the orchestrator has additional functionalities necessary for use cases of Retrieval Augmented Generation (RAG). RAG is an AI framework for retrieving facts from an external knowledge base to ground large language models (LLMs) on the most accurate, up-to-date information and to give users insight into the generative process of LLMs.

For now, the complete set of orchestration capabilities consists of:

- Integration with AI models: Integrations to call ChatGPT 3.5, ChatGPT 4, Claude 2, and Titan.

- Embedding: Integration with GPT embedding. Embeddings are dense vector representations that capture the semantic meaning of words.

- Token count: Feature to count tokens generated. Tokens are the basic units of text or code that an LLM uses to process and generate language. Tokens can be characters, words, subwords, or other segments of text or code.

- Vector database: Integration with Pinecone. A vector database is a type of database that indexes and stores vector embeddings for fast retrieval and similarity search, with capabilities like CRUD operations, metadata filtering, and horizontal scaling.

- Access management: Access control to specific models and content according to the AI use case.
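To illustrate what the embedding and vector database capabilities provide, the sketch below runs a similarity search over an in-memory dictionary. It is a toy stand-in that assumes non-zero vectors; it does not use the Pinecone API, and the vectors and IDs are made up.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k (id, score) pairs most similar to the query vector."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy two-dimensional "embeddings"; real ones have hundreds of dimensions.
index = {"post-a": [1.0, 0.0], "post-b": [0.0, 1.0], "post-c": [1.0, 1.0]}
matches = top_k([1.0, 0.1], index, k=2)
```

In a RAG use case, the text behind the best-matching IDs would be added to the prompt so the model answers from retrieved content.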

We developed logic to cache new translations to minimize the number of calls (and therefore costs) inside forums. The logic allows us to show cached translations and only call the API for new translations. So, if developer A requests the translation of a post for the first time, that translation is cached and subsequent developers who request the translation of the same forum post will see the cached translation.
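The caching behavior can be sketched as follows. The `TranslationCache` class and its in-memory dictionary are hypothetical; keying on a hash of the text, so that an edited post is translated again, is an assumption added for the example.

```python
import hashlib


class TranslationCache:
    """Serve stored translations and call the API only for unseen content."""

    def __init__(self, translate):
        self._translate = translate   # callable: (text, language) -> translation
        self._store = {}
        self.api_calls = 0

    def get(self, post_id, text, language):
        # Assumption: include a hash of the text so edits invalidate the entry.
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        key = (post_id, language, digest)
        if key not in self._store:
            self.api_calls += 1
            self._store[key] = self._translate(text, language)
        return self._store[key]


# A fake translator keeps the example self-contained.
cache = TranslationCache(lambda text, language: f"{language}:{text}")
first = cache.get(1, "hello", "ja")    # developer A: calls the API
second = cache.get(1, "hello", "ja")   # developer B: served from the cache
```

Here `cache.api_calls` stays at 1 after both requests, which is the cost saving described above.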

Lessons learned throughout our journey

The combination of our process and our experience during the implementation taught us some valuable lessons:

- LLMs are currently mature enough to be used for translations.

- A playground for prompt engineering is where the magic happens, and it reduces time-consuming processes.

- Correcting the grammar of the source text before translating leads to higher-quality translations, and AI can be used to do this.

- We can store and cache translated articles to save costs and accelerate results.

What’s next: No barriers, one community

Because the Developer Relations Team embraces the OutSystems culture of innovation and continuous improvement, we continue to evolve this project. These are our next steps:

- Allow rating and feedback to improve the quality of the translation.

- Introduce new languages to the AI translation.

- Enable the search of forum content in several languages.

The most fulfilling step of the journey is the confirmation that our Japanese-speaking community is having a much better experience. Since November 6, the day we launched AI translations, more than 6,200 forums have been translated from and into Japanese. From an IT perspective, everything is ready to remove language friction: No barriers, ONE Community.

It takes a village to make sure community members can participate in their own languages. So, Ricardo and I would also like to thank the teams that made this project happen:

- Frontend: Diego Almeida and Iuri Barreiro Silvestre

- AI core: Joao Araujo

- Community: Joao Pedro Costa, Susana Oliveira, Jonathan Ghalmi, Ricardo Toucedo, Nuno Delgado, Filipe Umbelino, and Vanessa Sousa

- Marketing: Miguel Marques and Sofia Marques

- Content reviewers and model testers: Akiko Yato, Akira Hirose, Masa(yuki) Hyugaji, Masae Sasano, Tomofumi Sugiyama, and Gao Yuan.

To see our translation in action or share in our forums, visit or join the OutSystems Community today.

Sandra Fernandes

Sandra is an accomplished professional with over 20 years of experience in IT. With an Engineering background and solid experience in consulting, project management and pre-sales, she has a passion for delivering great value fast with the OutSystems platform, agile methodologies, and happy teams. Sandra is currently applying her enthusiasm and expertise to make sure our Community members have the best possible experience in their journey, learning, evolving and sharing their success.
