AI-driven development with OutSystems Mentor

AI innovation at OutSystems: Unveiling hidden success

Alexandre Duarte de Almeida Lemos April 17, 2025 • 5 min read


For over half a decade, OutSystems has been a leader in embedding artificial intelligence (AI) into its platform, changing the way developers create and deliver software. From automating tedious software development lifecycle (SDLC) tasks to natural-language app generation and interactive development, OutSystems consistently pushes the boundaries of innovation. With the introduction of Mentor in OutSystems Developer Cloud (ODC), we have made AI a fundamental partner in the development process. It boosts productivity, improves quality, and lets developers concentrate on the strategic and creative aspects of their work. This post explores some of the award-winning AI innovations in Mentor code quality.

Mentor code quality

OutSystems relies on AI and Mentor to enforce adherence to SDLC best practices and to identify issues early. The code quality console highlights the findings from Mentor. Mentor automatically runs comprehensive code reviews every twelve hours to detect issues such as unused code and other problematic patterns. Using AI algorithms, Mentor analyzes code in depth across three key dimensions: maintainability, security, and performance.
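Unused code is one of the issues mentioned above, and it can be approximated as a reachability problem. The following is a minimal sketch under our own assumptions, not a description of how Mentor works. The application is modeled as a hypothetical reference graph, where each element maps to the elements it references. It also has a set of entry points, such as screens or exposed APIs. Anything that no entry point can reach is reported as unused. The function name `find_unused` and all element names are illustrative.

```python
from collections import deque


def find_unused(call_graph: dict[str, set[str]], entry_points: set[str]) -> set[str]:
    """Return elements that no entry point can reach.

    call_graph maps each element (screen, action, aggregate, ...) to the
    elements it references. This graph model is hypothetical.
    """
    reachable: set[str] = set()
    queue = deque(entry_points)
    while queue:
        node = queue.popleft()
        if node in reachable:
            continue
        reachable.add(node)
        # Follow every reference from this element.
        queue.extend(call_graph.get(node, set()) - reachable)
    # Any element that appears in the graph but was never visited is unused.
    all_elements = set(call_graph) | {t for targets in call_graph.values() for t in targets}
    return all_elements - reachable


if __name__ == "__main__":
    app = {
        "HomeScreen": {"GetOrders", "FormatDate"},
        "GetOrders": set(),
        "FormatDate": set(),
        "LegacyExport": {"FormatDate"},  # nothing references LegacyExport
        "OldHelper": set(),
    }
    print(sorted(find_unused(app, {"HomeScreen"})))  # ['LegacyExport', 'OldHelper']
```

`LegacyExport` still references live code. It is reported anyway, because reachability is computed from the entry points and not from the referencing side.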

Mentor uses a custom query language for identifying bad code patterns, which allows experts to define specific patterns they want to detect. This query language incorporates methods such as dataflow analysis.

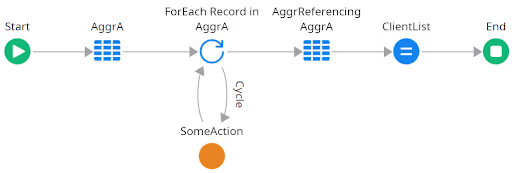

Since OutSystems code is structured as a graph, capturing problematic patterns requires more than just tracing paths between objects. Consider inefficient queries inside loops, which can lead to significant performance issues in OutSystems code.

Figure 1. Inefficient queries inside loops

We could search for queries inside a loop where the loop iterates over the result of another query. However, a hard-coded search would miss cases where other logic elements sit between the loop and the inner query. We could use regular path queries instead, but the logic between the two queries can form arbitrarily complex structures, and a path-based pattern can also match structures it should not. For example, the structure in Figure 2 should not be matched.

Figure 2. Regular path queries
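Here is a minimal sketch that makes the idea of a regular path query concrete. It uses the standard technique under our own assumptions and is not taken from Mentor. A regular expression over node labels is hand-compiled into a small automaton. A breadth-first search then explores pairs of (graph node, automaton state). The node names, the labels `Aggregate`, `ForEach`, `Assign`, and `Query`, and the function `regular_path_matches` are all hypothetical.

```python
from collections import deque
from typing import Callable

# One automaton transition: consume a node whose label satisfies the
# predicate, then move to the given automaton state.
Transition = tuple[Callable[[str], bool], int]


def regular_path_matches(
    edges: dict[str, list[str]],
    labels: dict[str, str],
    nfa: dict[int, list[Transition]],
    start: int,
    accept: set[int],
) -> set[tuple[str, str]]:
    """Return (first_node, last_node) pairs joined by a path whose sequence
    of node labels the automaton accepts (BFS over graph x automaton)."""
    results: set[tuple[str, str]] = set()
    for origin in labels:
        seen: set[tuple[str, int]] = set()
        # Let the automaton consume the origin node itself first.
        queue = deque(
            (origin, nxt) for pred, nxt in nfa.get(start, []) if pred(labels[origin])
        )
        while queue:
            node, state = queue.popleft()
            if (node, state) in seen:
                continue
            seen.add((node, state))
            if state in accept:
                results.add((origin, node))
            # Advance along every graph edge and every transition it allows.
            for succ in edges.get(node, []):
                for pred, nxt in nfa.get(state, []):
                    if pred(labels[succ]):
                        queue.append((succ, nxt))
    return results


if __name__ == "__main__":
    labels = {
        "GetCustomers": "Aggregate",
        "ForEachCustomer": "ForEach",
        "AssignName": "Assign",
        "GetOrders": "Query",
        "GetTotals": "Query",
    }
    edges = {
        "GetCustomers": ["ForEachCustomer"],
        "ForEachCustomer": ["AssignName", "GetTotals"],  # loop body, loop exit
        "AssignName": ["GetOrders"],
        "GetOrders": ["ForEachCustomer"],  # back edge closes the loop
    }
    # Pattern: Aggregate ForEach Any* Query
    nfa = {
        0: [(lambda lbl: lbl == "Aggregate", 1)],
        1: [(lambda lbl: lbl == "ForEach", 2)],
        2: [(lambda lbl: True, 2), (lambda lbl: lbl == "Query", 3)],
    }
    print(sorted(regular_path_matches(edges, labels, nfa, start=0, accept={3})))
    # [('GetCustomers', 'GetOrders'), ('GetCustomers', 'GetTotals')]
```

In this toy graph, the pattern matches two queries:

- `GetOrders`, which runs on every iteration.
- `GetTotals`, which runs once after the loop exits.

A sequence of node labels alone cannot express "inside the loop body". Limitations like this are what motivate patterns over sub-graphs rather than paths.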

For this reason, we created Regular Graph Pattern (ReGaP), a method for specifying graph patterns and searching for them in code graphs. ReGaP extends regular expressions to graphs, allowing us to specify sequences of nodes or arbitrary sub-graphs. Here is an example of how we use this approach to search for inefficient queries in a loop.

Figure 3. Regular Graph Pattern used to search for inefficient queries

In this case, we want to find all queries inside a loop that iterates over the result of the first aggregate. This pattern issues N+1 queries (one for the outer aggregate plus one per iteration) when a single optimized query would suffice.
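The N+1 pattern described above can also be checked directly over a sub-graph rather than along a path. The sketch below is a hand-written checker for this single pattern. It is not ReGaP, and its flow model is a hypothetical simplification. Each `ForEach` node records two things: the node whose output list it iterates (a one-step dataflow link) and the first node of its loop body. With that information, the checker can collect exactly the nodes inside the loop and flag any aggregate among them. `Node`, `loop_body`, and `find_n_plus_one` are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """A node in a hypothetical, simplified action flow."""
    name: str
    kind: str  # illustrative kinds: "Aggregate", "ForEach", "Assign", "End"
    successors: list[str] = field(default_factory=list)  # control-flow edges
    cycle: Optional[str] = None  # ForEach only: first node of the loop body
    iterates: Optional[str] = None  # ForEach only: node whose list is iterated


def loop_body(flow: dict[str, Node], loop: Node) -> set[str]:
    """Collect nodes reachable from the loop's cycle branch, stopping when
    control returns to the loop node (the end of one iteration)."""
    body: set[str] = set()
    stack = [loop.cycle] if loop.cycle else []
    while stack:
        name = stack.pop()
        if name == loop.name or name in body:
            continue
        body.add(name)
        node = flow[name]
        stack.extend(node.successors)
        if node.cycle:  # descend into nested loops as well
            stack.append(node.cycle)
    return body


def find_n_plus_one(flow: dict[str, Node]) -> list[tuple[str, str, str]]:
    """Report (outer_aggregate, loop, inner_aggregate) for every aggregate
    that runs inside a loop iterating over another aggregate's result."""
    findings = []
    for loop in flow.values():
        if loop.kind != "ForEach" or loop.iterates is None:
            continue
        source = flow.get(loop.iterates)
        if source is None or source.kind != "Aggregate":
            continue
        for name in sorted(loop_body(flow, loop)):
            if flow[name].kind == "Aggregate":
                findings.append((source.name, loop.name, name))
    return findings


if __name__ == "__main__":
    flow = {
        "GetCustomers": Node("GetCustomers", "Aggregate", ["ForEachCustomer"]),
        "ForEachCustomer": Node("ForEachCustomer", "ForEach", ["GetTotals"],
                                cycle="AssignName", iterates="GetCustomers"),
        "AssignName": Node("AssignName", "Assign", ["GetOrders"]),
        "GetOrders": Node("GetOrders", "Aggregate", ["ForEachCustomer"]),
        "GetTotals": Node("GetTotals", "Aggregate", ["End"]),
        "End": Node("End", "End"),
    }
    print(find_n_plus_one(flow))
    # [('GetCustomers', 'ForEachCustomer', 'GetOrders')]
```

`GetTotals`, which runs after the loop exits, is not reported. A real analysis would also need to follow the iterated list through intermediate assignments and variables, which is where dataflow analysis comes in.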

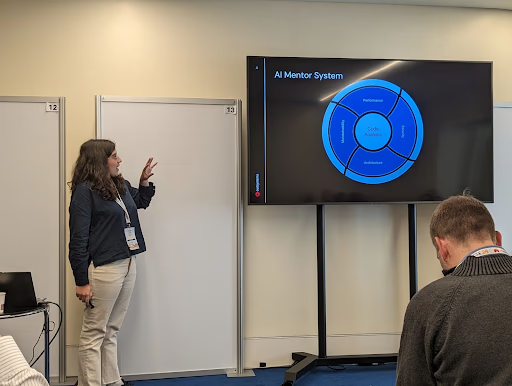

We use automated reasoning to search for these patterns in the code. While ReGaP was developed for OutSystems code, it is generic enough to be applied to traditional high-code languages. A research paper on this work was accepted at the AAAI Conference on Artificial Intelligence, which validated its relevance. AAAI is an A*-rated conference where practitioners and researchers exchange insights. Presenting our work there allowed us to engage with experts from other low-code companies, reinforcing the impact of our approach.

Figure 4. OutSystems at AAAI

More AI magic: Managing technical debt

This work is just part of the AI magic that happens behind the scenes of Mentor. Beyond this research, we also shared what we have learned about managing technical debt since OutSystems first released its AI capabilities in 2017.

In 2024, Portugal hosted ICSE (the International Conference on Software Engineering), one of the largest software engineering conferences. OutSystems could not miss the opportunity to showcase its advancements in software engineering, particularly in the low-code field.

Figure 5. Presenting (and hosting) at ICSE

A key takeaway from our presentation was the discrepancy between the technical debt weights assigned by users and those assigned by experts. The average weight of security patterns increased significantly, while the weights of some of the more subjective maintainability patterns decreased. Our discussions with researchers and with companies such as Google, Microsoft, SonarCloud, and JetBrains highlighted the importance of refining these metrics.

Tired of reading? Well, try to hang on, because I still have another exciting story to cover. I’ve talked about generating applications with artificial intelligence and analyzing code with AI, so what is missing?

Low-code test generation

Testing is a crucial part of software development, but tests can be cumbersome to create and maintain. OutSystems provides a behavior-driven development (BDD) framework that allows developers to use natural-language sentences to define expected software behaviors. This is already a useful abstraction. However, we took it a step further by using AI for test generation.

Rather than relying on generative AI alone, which offers no guarantees of coverage or correctness, we developed BugOut, a symbolic execution approach tailored to the low-code properties of OutSystems. Symbolic execution runs code with symbolic inputs instead of specific values. It extracts the constraints that each execution path imposes on those inputs. Solving those constraints yields concrete inputs that exercise each path.
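The core loop of symbolic execution is to fork at every branch and record one constraint set per path. That loop fits in a few dozen lines. This is a toy illustration under our own assumptions, not BugOut, and the tuple-based mini-language is hypothetical. A real engine would hand each path constraint to a constraint (SMT) solver. This sketch substitutes a brute-force search over a small integer range instead. `subst`, `evaluate`, `explore`, `solve`, and `generate_tests` are illustrative names.

```python
from itertools import product

# Hypothetical toy language (not OutSystems logic):
#   expressions: ("var", name) | ("const", n) | (op, left, right), op in add/lt/eq
#   statements:  ("assign", name, expr) | ("if", cond, then_block, else_block)
#                | ("return", expr); every path must end in a return.
Expr = tuple


def subst(expr: Expr, store: dict[str, Expr]) -> Expr:
    """Rewrite an expression in terms of the symbolic inputs."""
    if expr[0] == "var":
        return store[expr[1]]
    if expr[0] == "const":
        return expr
    return (expr[0], subst(expr[1], store), subst(expr[2], store))


def evaluate(expr: Expr, env: dict[str, int]):
    """Concretely evaluate a symbolic expression for specific input values."""
    if expr[0] == "var":
        return env[expr[1]]
    if expr[0] == "const":
        return expr[1]
    left, right = evaluate(expr[1], env), evaluate(expr[2], env)
    if expr[0] == "add":
        return left + right
    if expr[0] == "lt":
        return left < right
    if expr[0] == "eq":
        return left == right
    raise ValueError(f"unknown operator {expr[0]!r}")


def explore(block, store, path, paths) -> None:
    """Symbolically execute a block, forking at every branch and recording
    (path constraint, symbolic return value) for each complete path."""
    for i, stmt in enumerate(block):
        if stmt[0] == "assign":
            store = {**store, stmt[1]: subst(stmt[2], store)}
        elif stmt[0] == "if":
            cond = subst(stmt[1], store)
            rest = block[i + 1:]
            explore(stmt[2] + rest, store, path + [(cond, True)], paths)
            explore(stmt[3] + rest, store, path + [(cond, False)], paths)
            return
        elif stmt[0] == "return":
            paths.append((path, subst(stmt[1], store)))
            return


def solve(path, inputs, domain=range(-10, 11)):
    """Stand-in for a real constraint solver: brute-force a small domain."""
    for values in product(domain, repeat=len(inputs)):
        env = dict(zip(inputs, values))
        if all(evaluate(cond, env) == wanted for cond, wanted in path):
            return env
    return None  # no solution in the searched domain


def generate_tests(program, inputs):
    """Return one (input values, expected result) test per feasible path."""
    paths = []
    explore(program, {name: ("var", name) for name in inputs}, [], paths)
    tests = []
    for path, result in paths:
        env = solve(path, inputs)
        if env is not None:
            tests.append((env, evaluate(result, env)))
    return tests


if __name__ == "__main__":
    # y = x + 1; if y < 5: (if x == 2: return 100); return 0 ... else return y
    program = [
        ("assign", "y", ("add", ("var", "x"), ("const", 1))),
        ("if", ("lt", ("var", "y"), ("const", 5)),
         [("if", ("eq", ("var", "x"), ("const", 2)), [("return", ("const", 100))], []),
          ("return", ("const", 0))],
         []),
        ("return", ("var", "y")),
    ]
    for env, expected in generate_tests(program, ["x"]):
        print(env, "->", expected)
    # {'x': 2} -> 100
    # {'x': -10} -> 0
    # {'x': 4} -> 5
```

Each of the three paths yields one test input, and the expected result comes from evaluating that path's symbolic return value. Brute force only works for tiny domains. The point of the sketch is the forking and constraint collection, not the solving.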

To validate BugOut, we compared it with state-of-the-art solutions, including Klee, ExposeJS, JSFuzz, and OpenAI's GPT-3.5. The following table compares the time each tool spent to generate its best set of tests.

| Tool | Min | Max | Mean | Median |
|---|---|---|---|---|
| BugOut | 0.0001 | 350.9 | 0.03 | 0.02 |
| ExposeJS | 34 | 1800 | 698 | 317 |
| JSFuzz | 59 | 1800 | 487.8 | 220 |
| GPT-3.5 | 10 | 45 | 19.8 | 14.5 |
| Klee | 1 | 375 | 15.6 | 1 |

BugOut and Klee achieved 100% edge coverage, while ExposeJS, JSFuzz, and GPT-3.5 had mean edge coverage of 73.58%, 58.8%, and 74.7%, respectively. Additionally, GPT-3.5 produced an average of 5.4% of tests with errors.
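Edge coverage, the metric behind these percentages, is the fraction of control-flow edges that at least one test exercises. Here is a minimal sketch. It assumes each test run is recorded as the sequence of control-flow nodes it visited, which is a hypothetical trace format. The function name `edge_coverage` is illustrative.

```python
def edge_coverage(cfg_edges: set[tuple[str, str]], traces: list[list[str]]) -> float:
    """Percentage of control-flow edges traversed by at least one trace."""
    if not cfg_edges:
        return 100.0
    covered = {
        (a, b)
        for trace in traces
        for a, b in zip(trace, trace[1:])  # consecutive nodes form an edge
        if (a, b) in cfg_edges
    }
    return 100.0 * len(covered) / len(cfg_edges)


if __name__ == "__main__":
    # Control flow of: if cond: A else: B, followed by End
    cfg = {("Start", "A"), ("Start", "B"), ("A", "End"), ("B", "End")}
    print(edge_coverage(cfg, [["Start", "A", "End"]]))  # 50.0
    print(edge_coverage(cfg, [["Start", "A", "End"], ["Start", "B", "End"]]))  # 100.0
```

Reaching 100% requires at least one test per branch outcome. This is why a tool that enumerates paths can guarantee full edge coverage, while tests sampled without that structure may not.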

Our novel approach was published at the IEEE International Conference on Software Testing, Verification, and Validation (ICST, rank A).

Figure 6. OutSystems at the IEEE International Conference on Software Testing

OutSystems was also invited to participate in an expert panel on AI-driven software testing.

Figure 7. Participating in the panel called “The Role of AI in Software Testing”

Why stop at unit tests? UI testing remains a significant challenge. While we have tackled test generation and code analysis, we continue to explore new frontiers. Stay tuned—we have more in the works!

Consistently pushing the boundaries of AI innovation

While you’re waiting for the next installment of our AI success stories, check out this overview of AI at OutSystems.

Alexandre Duarte de Almeida Lemos

Alexandre Duarte de Almeida Lemos is a Senior Research Scientist at OutSystems. Certified in Generative AI with Large Language Models, he also holds a Ph.D. and a master’s degree in Information Systems and Computer Engineering from Instituto Superior Técnico in Lisbon. He was instrumental in the development of OutSystems Mentor and has authored or coauthored 15 peer-reviewed scientific publications. Together with a team of OutSystems innovators, he shares a patent and has other patents pending. Before he joined OutSystems in 2021, he was an instructor at Instituto Superior Técnico.
