2. AI-powered applications

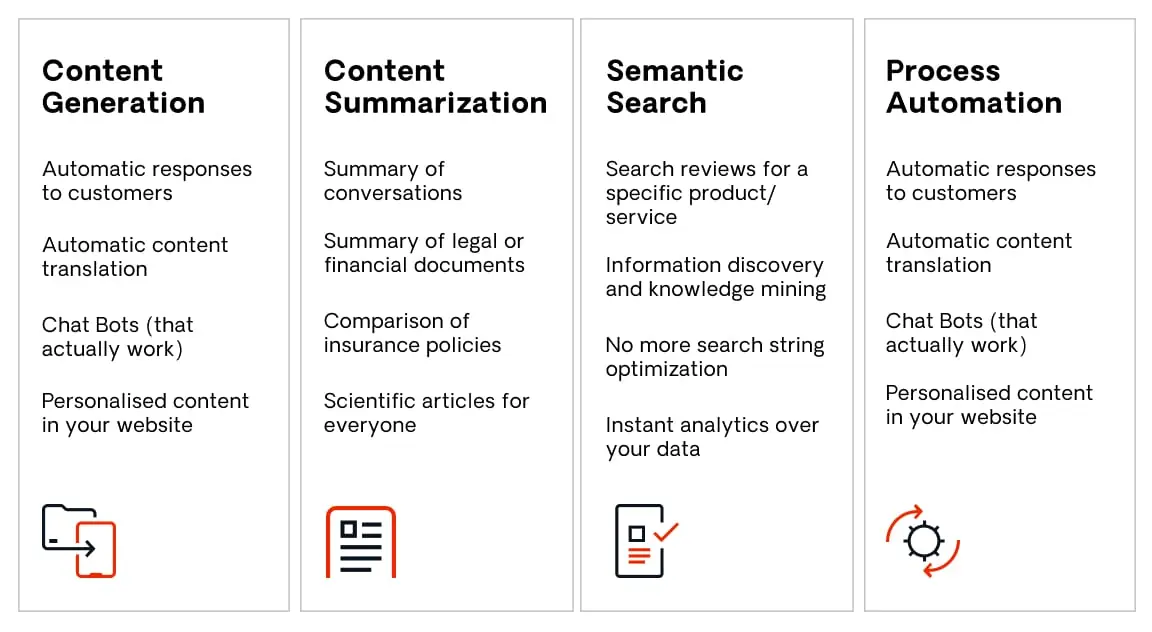

The OutSystems platform is itself infused with AI, and it also lets developers add artificial intelligence to the applications they build. The sections below describe some of the AI-powered capabilities that OutSystems customers are building into their applications today.

Table of contents

- Overcoming barriers to AI and GenAI integration in applications

- Infusing generative AI into your applications - AI Agent Builder

Overcoming barriers to AI and GenAI integration in applications

The potential of integrating GenAI into applications is enormous. However, adopting these advanced capabilities is challenging. It requires specialized AI expertise, which can be costly and scarce, along with complex, resource-intensive, and time-consuming modifications to legacy systems. In addition, integrating multiple data sources to ensure the relevance, accuracy, and quality of AI responses adds another layer of difficulty.

The OutSystems low-code platform removes these obstacles to building GenAI-infused applications. With OutSystems, developers can easily integrate advanced language models from multiple providers directly into their apps, all without requiring specialized AI knowledge. Prebuilt use cases and connectors created by the OutSystems Community can enrich existing applications with powerful AI capabilities (a full list of AI and Machine Learning connectors is available in the Forge).

Infusing generative AI into your applications - AI Agent Builder

With AI Agent Builder, powered by Mentor, IT teams can integrate generative AI into applications and operations with speed and security. Out-of-the-box integrations with cutting-edge large language models (LLMs) available from Microsoft Azure, Amazon Bedrock, and Meta make it possible to build AI agents—even from custom models—with minimal effort. When embedded directly into OutSystems applications, agents deliver natural language interactions that respond to user inputs or perform automated actions.

To give agents smarter, more contextual responses, LLM capabilities are combined with retrieval-augmented generation (RAG), which retrieves relevant content from an organization’s own data and supplies it to the model alongside the user’s request. For example, a customer service AI agent can automatically address customer inquiries based on your organization’s knowledge base, documentation, and transaction histories.
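
To make the RAG idea concrete, here is a minimal sketch in Python. It is not OutSystems code and does not reflect how AI Agent Builder is implemented. The knowledge-base snippets, the function names, and the simple word-overlap scoring are all assumptions chosen for illustration; production systems typically use vector embeddings for retrieval and then send the resulting prompt to an LLM.

```python
import re
from collections import Counter

# Hypothetical knowledge-base snippets (illustrative only); a real agent
# would retrieve from the organization's own documents and records.
KNOWLEDGE_BASE = [
    "Refunds are issued within 5 business days of receiving the returned item.",
    "Premium support is available by phone from 8am to 6pm on weekdays.",
    "Orders can be cancelled free of charge until they have shipped.",
]


def tokenize(text):
    """Lowercase the text and count its alphanumeric words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Return the top_k documents sharing the most words with the question.

    Word overlap is a stand-in for the embedding similarity a real
    retriever would use.
    """
    question_words = tokenize(question)

    def overlap(document):
        return sum((question_words & tokenize(document)).values())

    return sorted(documents, key=overlap, reverse=True)[:top_k]


def build_prompt(question, documents, top_k=1):
    """Combine the retrieved context with the question into one LLM prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", KNOWLEDGE_BASE))
```

The point of the sketch is the order of operations: the agent first retrieves the most relevant organizational content, then passes that content to the model together with the user's question, so the answer is grounded in the organization's data rather than in the model's general training alone.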