Agentic AI, robots, and humans: Can we all get along?

Rodrigo Coutinho June 25, 2025 • 7 min read

Picture this: You're rushing between meetings, and your AI agent notices an important appointment coming up. Without your asking, it sends you a briefing about who you're meeting, schedules a follow-up, and even suggests talking points based on your previous conversations. This isn't science fiction anymore. It's agentic AI, and it's changing how we work.

But here's the thing most people get wrong about agentic AI. They think it's just ChatGPT with extra steps. It's not. Agentic AI is fundamentally different from generative AI because it doesn't wait for you to start the conversation. In this post, I explain agentic AI from my perspective and look at its implications for the future.

TL;DR: Don’t have time for this? Watch me break it down in this video.

What makes agentic AI actually agentic

Agentic AI is all about having agency. When ChatGPT first appeared, the interaction was simple: you asked a question, and it gave you an answer. You always started the interaction. Agentic AI goes further: it can also take the initiative.

The key difference is autonomy. Instead of waiting for your prompt, agentic AI can monitor your calendar, recognize patterns, and act on your behalf, such as noticing an upcoming meeting and proactively sending you the information you need. Its purpose is to do things for you and accelerate your workflow.

But here's where it gets interesting. Agents can make decisions autonomously, but you control how much freedom they get. You can give an agent full power to act, or you can add breakpoints where a human decides whether the action should be taken.

The right level of autonomy depends on how serious the consequences of an action are. Is an agent sending you a meeting briefing? That's fine to automate. Is it sending an email to your customer? You probably want to review that first. Beyond that, it comes down to how you prefer to work.
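To make the breakpoint idea concrete, here is a minimal Python sketch, assuming each action an agent proposes carries a rough impact rating. The names `Impact`, `Action`, `run_action`, and `always_reject` are hypothetical, and the `approve` callback stands in for whatever review step a human would actually use (a chat prompt, a ticket, a button in an app). Actions below the threshold run automatically; anything at or above it waits for a human decision.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Impact(Enum):
    """Hypothetical impact levels; a real system might define more."""
    LOW = 1   # e.g. sending yourself a meeting briefing
    HIGH = 2  # e.g. emailing a customer


@dataclass
class Action:
    description: str
    impact: Impact


def run_action(action: Action,
               approve: Callable[[Action], bool],
               threshold: Impact = Impact.HIGH) -> str:
    """Run low-impact actions directly; stop at a human breakpoint
    when the action's impact reaches the threshold."""
    if action.impact.value >= threshold.value and not approve(action):
        return f"skipped: {action.description}"
    # A real agent would perform the side effect here (send the email, etc.).
    return f"done: {action.description}"


def always_reject(action: Action) -> bool:
    """Stand-in reviewer that rejects everything, to show the breakpoint."""
    print(f"review requested: {action.description}")
    return False


if __name__ == "__main__":
    briefing = Action("send meeting briefing to me", Impact.LOW)
    customer_email = Action("email follow-up to customer", Impact.HIGH)
    print(run_action(briefing, always_reject))
    print(run_action(customer_email, always_reject))
```

Passing `threshold=Impact.LOW` would send every action through review; where to draw the line is the same judgment call about consequences described above.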

Can agents actually reason?

The answer depends on your definition of reasoning. These models use statistical algorithms to come up with answers, and it looks like they're reasoning. It's not exactly the same kind of reasoning humans do, but it is a form of reasoning.

Where this becomes powerful is transparency. People were trusting AI answers without understanding the steps that led to them, so models evolved to explain why they reached a specific solution. Some models, when asked, can show you the information they accessed, the steps they took, and the sources they used.

In that sense, we can say they reason: they combine multiple sources, algorithms, and information to reach conclusions.

This reasoning capability is already being used across many applications. The one people talk about most is coding, where developers use these agents to build applications faster. But there are other scenarios too. In translation, these models help convert documents between languages. In legal work, they help analyze legal documents, condensing large amounts of information into summaries that are easy to digest and understand.

How agentic AI is reshaping development

Agentic AI is completely reshaping the developer experience. It started simple: instead of searching the internet for answers to specific problems, you could just ask ChatGPT how to do something. It gives you code examples that aren't perfect, but they're good enough to get started. Instead of starting from zero, you're starting at 40 to 60 percent complete.

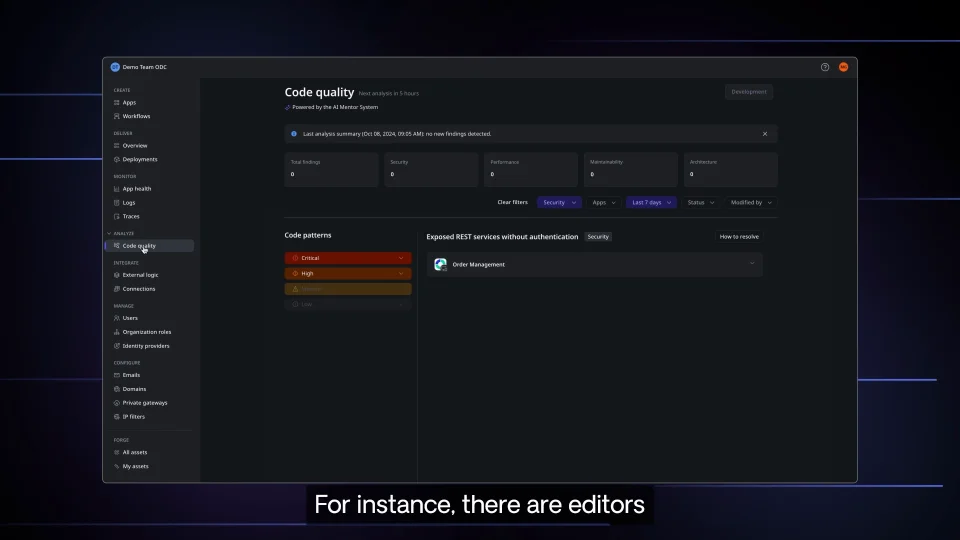

Now there are agentic tools and AI agents that take actions for you. Some code editors inspect your source code, decide whether it needs refactoring, and can carry out the refactoring automatically, without intervention.

This means the developer role has changed. Before, it was all about typing, writing code, debugging, and making sure everything worked. Now it's more about validating the code that AI produces. Even junior developers using these tools are asked to behave more like lead developers. They do code reviews, validate AI work, and effectively manage a team of AI bots doing work for them.

Human work isn't going away anytime soon. There are still many things humans need to do. For example, AI models build on top of what already exists, so if you want something truly creative and unique, you still need a human. Humans are also needed to validate results, ensure security, and make sure everything follows company guidelines. But the nitpicky details of typing code? Those are disappearing. Developers will still be there, but their role will be different.

Beyond coding: Where else agentic AI works

The possibilities for agentic AI in our daily lives are enormous. There are many tasks we do daily that have nuances, and agentic AI seems good at dealing with uncertainty and natural communication. You can say a sentence without explaining exactly what you want, and it understands from context what you're asking. It's almost human communication.

We already use this with Alexa and similar devices, but it can go much further.

Robot dreams

The most exciting application is in robotics, especially for assisted care and living for the elderly. Robots powered by agentic AI open up possibilities for people who have lost independence due to physical disabilities. An agent that helps them move around the house and rely less on other caregivers could be very powerful.

The everyday automation we're still waiting for

Here's something that frustrates me: I hate doing dishes. It seems like this should be automated by now, but humans still need to do it. The difficulty comes from small variations: different dish sizes, machine sizes, and kitchen layouts. It will be interesting to see how far we can go in making systems adapt to environments that differ from one place to another.

Of course, agentic AI in robots needs serious safeguards to ensure there are no issues, that it truly understands what needs to be done, and that it doesn't endanger any people or animals.

Evaluating agentic AI performance

There are several ways to evaluate the performance of an agentic system. The most basic is unit testing. You can run agents against a set of test inputs, and because this is AI, the answers will vary. The output isn't deterministic, but you can check that it stays within expected patterns.

For instance, if you're using AI to generate code, you can try compiling it to see whether it's valid. If you're generating an email, you can check that it doesn't mention competitors by name. These kinds of checks are easy to automate.
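Checks like these can be written as ordinary unit tests that run on every sample an agent produces. The minimal Python sketch below assumes the agent generates Python code and plain-text emails; `compiles` and `mentions_competitor` are hypothetical helper names, and the competitor list is made up. Compiling only proves the code is syntactically valid, not that it does the right thing, so it complements functional tests rather than replacing them.

```python
import re


def compiles(source: str) -> bool:
    """True if generated Python source parses and byte-compiles.
    compile() does not execute the code."""
    try:
        compile(source, "<generated>", "exec")
        return True
    except (SyntaxError, ValueError):  # ValueError: e.g. null bytes on older Pythons
        return False


def mentions_competitor(text: str, competitors: list[str]) -> bool:
    """True if any competitor name appears as a whole word, ignoring case."""
    return any(
        re.search(rf"\b{re.escape(name)}\b", text, flags=re.IGNORECASE)
        for name in competitors
    )


if __name__ == "__main__":
    competitors = ["Acme Corp", "Globex"]  # hypothetical names
    # Stand-ins for several runs of the same prompt; real outputs would vary.
    drafts = [
        "Hi Ana, thanks for the call today. Here is the summary.",
        "Unlike Globex, we can deliver next week.",
    ]
    for draft in drafts:
        status = "FAIL" if mentions_competitor(draft, competitors) else "ok"
        print(f"{status}: {draft}")
    print("code ok:", compiles("def add(a, b):\n    return a + b\n"))
```

Because the output is not deterministic, the useful assertion is that every sample passes, not that the text matches one expected string.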

But the most important evaluation is how much you benefit from implementing these agents, which you measure through return on investment. If your goal is to accelerate your development team, compare its performance before and after introducing agents. The same applies to marketing teams trying to speed up content creation.

This validation is still done by humans, but you can also have machines validate other machines, using agents to check the work of other agents. For example, I can create an agent that checks whether another agent's output contains inappropriate content. It's a well-known and widely used technique.
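Here is a minimal Python sketch of one agent reviewing another, assuming both are exposed as plain functions: a drafter that produces text and a checker that approves or rejects it. In practice the checker would typically be a second model call with its own instructions; here both are stubs, and `generate_with_review` is a hypothetical name. Rejected drafts are regenerated a limited number of times, and if none passes, the function returns `None` so a human can take over.

```python
from typing import Callable, Iterator, Optional

Drafter = Callable[[str], str]   # prompt -> draft
Checker = Callable[[str], bool]  # draft -> approved?


def generate_with_review(prompt: str,
                         drafter: Drafter,
                         checker: Checker,
                         max_attempts: int = 3) -> Optional[str]:
    """Ask the drafter for drafts until the checker approves one.
    Returns None after max_attempts rejections (escalate to a human)."""
    for _ in range(max_attempts):
        draft = drafter(prompt)
        if checker(draft):
            return draft
    return None


if __name__ == "__main__":
    # Stub "agents" standing in for real model calls.
    canned: Iterator[str] = iter(["Draft with a BANNED phrase.", "Clean, polite draft."])

    def stub_drafter(prompt: str) -> str:
        return next(canned)

    def stub_checker(draft: str) -> bool:
        return "banned" not in draft.lower()

    print(generate_with_review("Write a customer update", stub_drafter, stub_checker))
```

Capping the retries keeps the loop from running indefinitely when the checker keeps rejecting the same kind of output.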

The future: More proactive, less reactive

I think agents will become more proactive in the future. Right now, it's mostly about asking them for something and getting a response. You can simulate automation by triggering them periodically, but things are still pretty reactive.

It would be interesting to see these technologies respond more proactively to changes in their environment. If something changes, such as a glass breaking at home or a market shift a company misses because nobody is watching Bloomberg all day, an agent that notices and takes action on its own would be fascinating.
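The gap between periodic triggering and truly proactive behavior can be sketched in Python. `poll_once` is what a scheduled run does: check the state and act only if it changed since the last check, so anything that happens between runs waits for the next one. `EventBus` is a push model in which an agent subscribes to events and reacts as soon as one is published. Both names and the event types are hypothetical; a production system would use a real scheduler or message broker.

```python
from typing import Any, Callable

Handler = Callable[[dict], Any]


def poll_once(read_state: Callable[[], str],
              last_seen: str,
              on_change: Callable[[str], Any]) -> str:
    """Reactive style: one scheduled check. Acts only if the state changed
    since the previous run; returns the state to remember for next time."""
    current = read_state()
    if current != last_seen:
        on_change(current)
    return current


class EventBus:
    """Proactive style: agents subscribe to event types and are called
    immediately when a matching event is published."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = {}

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers.setdefault(event_type, []).append(handler)

    def publish(self, event_type: str, payload: dict) -> list:
        # Events nobody listens to simply produce no reactions.
        return [handler(payload) for handler in self._handlers.get(event_type, [])]


if __name__ == "__main__":
    last = poll_once(lambda: "price=101", "price=100",
                     lambda s: print(f"scheduled run noticed change: {s}"))

    bus = EventBus()
    bus.subscribe("glass_broken", lambda p: f"alert: glass broken in {p['room']}")
    print(bus.publish("glass_broken", {"room": "kitchen"}))
```

With polling, reaction time is bounded by the schedule; with events, the agent reacts when the change happens, which is closer to the proactive behavior described above.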

Agent-to-agent communication will also evolve. You can already do it today, but the communication has to be set up explicitly. Making it implicit would be interesting. I know that raises the Skynet danger, but with all that power, the kinds of solutions that could emerge would be fascinating.

Why I don't want sad robots

I'm not sure about emotions in AI agents. Emotions are amazing in humans, but I can see them being dangerous in something autonomous, especially robots. I explain why in this video:

I prefer robots to be less emotional and more analytical, while we humans are the artists with emotions who see the world from that perspective. That's how we all get along: by doing what we're each best at.

I see a future full of partnerships where agentic AI handles the analytical heavy lifting while humans bring creativity, emotional intelligence, and strategic thinking to the table. And maybe, just maybe, someone will finally solve the dishes problem.

Rodrigo Coutinho

A member of the founder’s team, Rodrigo has a passion for app development, great products, and geeky stuff. He spends his time designing future versions of the OutSystems platform and dreaming about the cool future of software development.
